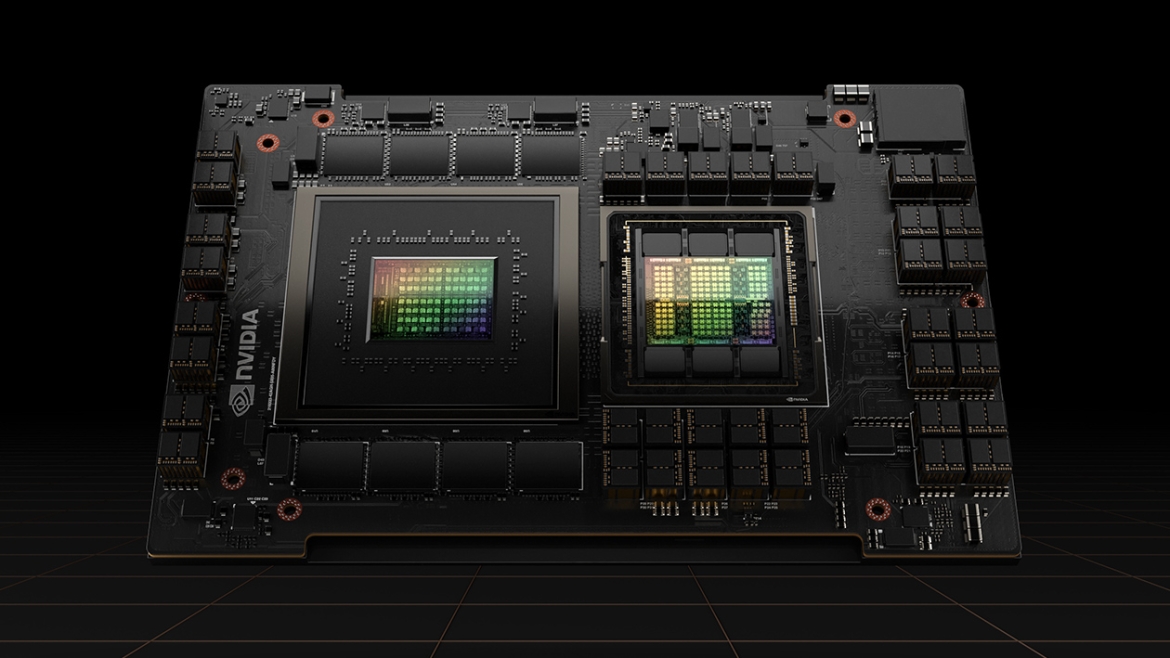

NVIDIA H100

Cutting Edge for the Data Center

The NVIDIA H100 Tensor Core GPU delivers a significant performance boost for accelerated computing, combining high performance, scalability, and security across a wide range of workloads. With the NVIDIA NVLink Switch System, up to 256 H100 GPUs can be interconnected to accelerate demanding, large-scale workloads.

The H100's purpose-built Transformer Engine is designed to handle language models with trillions of parameters. According to NVIDIA, it speeds up large language models by up to 30x compared with the previous generation, which makes the H100 particularly well suited to conversational AI use cases.

AI solutions have now become mainstream. Companies that want to stay competitive need infrastructure of this kind to run their AI-based business processes effectively and to roll out new ones successfully.

Any Questions?

If you would like to know more about this subject, I am happy to assist you.
